BRAVE women have spoken of the horror and humiliation of having their photos “undressed” by AI.

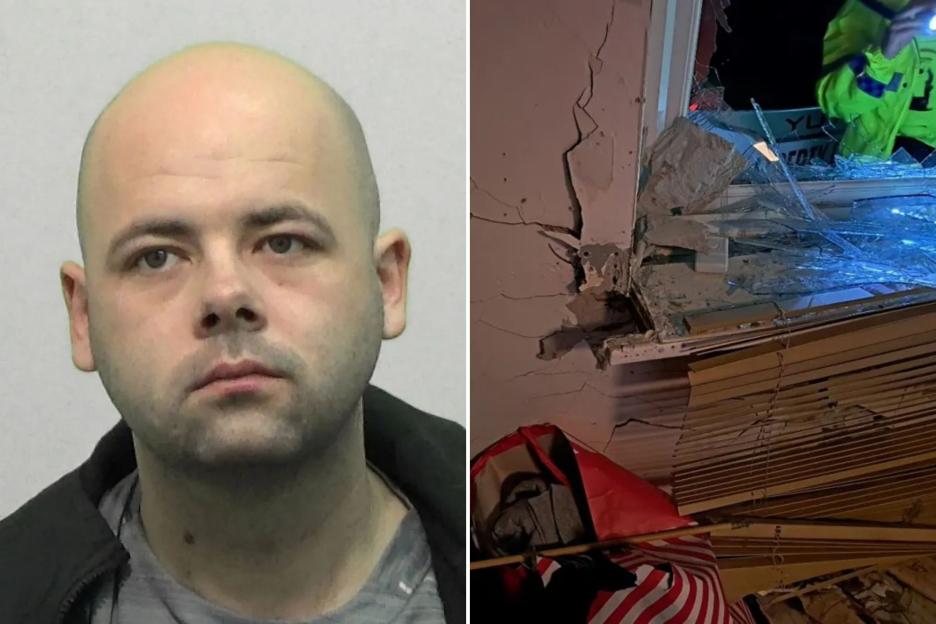

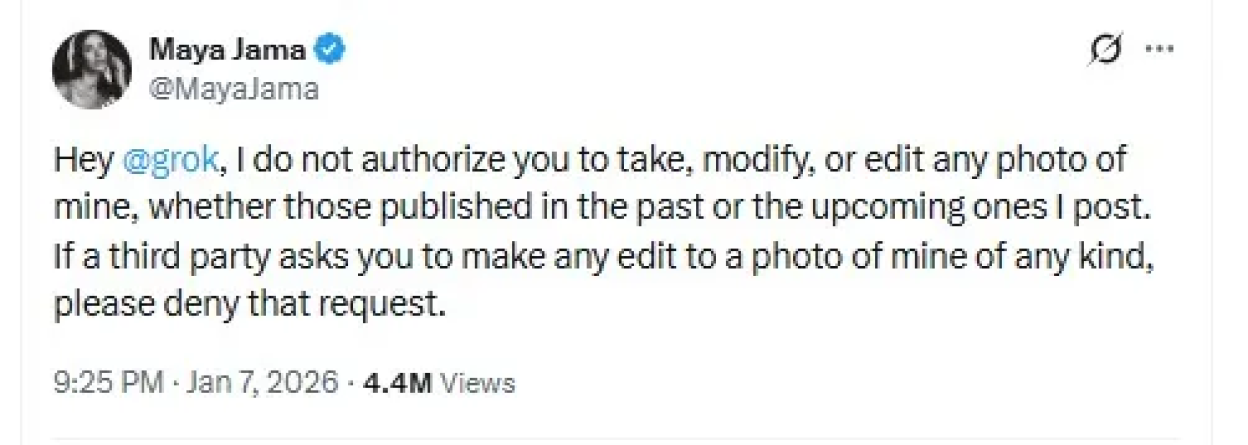

It comes after Love Island host Maya Jama hit out at Elon Musk’s bot Grok on social media site X.

Online trolls used chatbot Grok to modify Maya Jama’s photos without her consent. Credit: X

One request saw Maya turned into Wonder Woman. Credit: x.com

Maya firmly told the AI tool not to make the edits

Grok responded to Maya and promised to abide by her request

The world’s richest man’s firm has now pledged it will not allow Grok to edit images of real people.

But experts and victims fear there is still a slew of other AI tools that could be misused to target women online.

Maya, 31, first spoke out as both celebrities and everyday people said they were “sexualised” by Grok’s image-editing function.

Online creeps have been asking AI assistant tools like Grok to edit images of women – including undressing them or putting them in explicit outfits.

The UK government has vowed to bring a new law into force to make creating such images with AI illegal.

Four women have now spoken to The Sun about their ordeal after finding their pictures had been twisted and edited by online weirdos.

In one analysis, 102 requests were made to Grok in a ten-minute period to digitally edit photos so the people in them were wearing bikinis, Reuters reported.

Maya demanded the AI stop editing photos of her – and warned the internet is “scary” and “only getting worse”.

Jessaline Caine, 25, from Woking, told The Sun her photos were targeted by anonymous accounts demanding Grok sexualise the images.

And she said the campaign of twisted edits only got worse when she started to call it out on X.

She told The Sun: “Anonymous accounts started using my ordinary photos as test material, repeatedly prompting Grok to sexualise them as if it was a game, and I was the prop.”

Jessaline added: “AI tools that can clone your face, voice or body turn consent into a joke.”

Speaking about when she first saw the trend take off, Jessaline said: “I first saw the images just before Christmas, when OnlyFans models were asking Grok to strip their own photos into bikinis.

Jessaline Caine told The Sun that she felt “utterly powerless” when anonymous users produced AI pictures of her in a bikini. Credit: SUPPLIED

“I found that uncomfortable – and of course, anonymous accounts quickly picked up on this and began replying under women’s photos with the same request, to strip them into a bikini.

“It made me feel disgusted and outraged.

“The constant flood of images which grew day by day until its peak at the start of early January was just so stomach churning.”

Jessaline went on: “What’s most disturbing is the powerlessness. Strangers can take a normal photo and turn it into sexual content at scale with a tool built into the platform, then vanish behind anonymity.

“My image is now no longer mine and is public property for harassment that has been built into the product of Twitter.”

Daisy Blakemore‑Creedon, 20, from Peterborough, told how she was horrified to discover that creeps had used Grok to make “very realistic” bikini shots of her.

She revealed even her family saw the pictures – and asked her to try and get them taken down.

She said: “It made me feel very uncomfortable, especially as being a young female.

“I first saw the bikini images about three months ago. My family showed it to me after seeing it on X, and said that I need to get these removed.

“One image was quite a modest one of me in a dress, when I’d taken a selfie, and someone then adapted that into a full body picture of me in a bikini.”

Daisy believes that the consequences of AI-produced images via Grok for young women could be severe. Credit: SUPPLIED

Daisy, a local councillor who uses X to engage with residents, said: “If people don’t start to put a stop to it, then it’s probably going to get worse.

“This has happened to me several times now, and not all the images I reported have been removed.”

She believes there must be limits on how Grok and other AI tools are used.

She said: “If people are already using these images against me, the consequences for other young women could be severe.

“I think banning X is the wrong answer, because if you think about these images that are being created – X has been a platform for victims speaking up about sexual abuse.

“The only thing that needs banning is AI systems and Photoshop, which should not allow for these images to be created.”

Maria Bowtell first took the pictures as a compliment – until it started to escalate further. Credit: SUPPLIED

Maria Bowtell, 35, from Bridlington, said she first encountered the trend after posting a New Year’s message with a photo from a family party.

At first, she admits she almost took it as a “compliment” – until she realised the scale of the problem.

Maria said: “I was almost flattered – I’m shallow enough to admit initially it was quite fun.

“I just thought, oh, people want to see me in a bikini, that’s a compliment.

“And then I got another one, another one and another one.

“It made me feel more uncomfortable.”

She later asked Grok to list the accounts using bikini prompts on her photo so she could block them – and said it was “chilling” to see requests escalate, including one request asking Grok to “put her in a string bikini.”

Maria doesn’t believe the platform itself should be banned but wants stricter safeguards around AI tools.

She said: “The government should enforce the laws which already exist.

“These are users at fault and not the tool, and it is the users responsible who should be held individually to account and not the platform.”

Paula London told The Sun that she fears her professional reputation will be damaged by AI pictures produced of her. Credit: SUPPLIED

For 41‑year‑old Paula London, from Marylebone, the manipulated images have been “very unsettling,” and she fears lasting damage to her career.

Paula, a TV pundit, told The Sun: “I have seen inappropriate alterations of my pictures without my consent – and for me it has been very unsettling.

“It absolutely makes me hesitant to post pictures on X now.

“There’s a real risk to my professional reputation if someone misuses my image, and that’s a serious concern.

“Someone took my picture, and then used Grok to have me sitting down next to them in their home. So that happens, and that’s really weird.

“Unfortunately in this day and age, people believe what they see – my fear is that if they see one of these pictures, that they’ll actually think it’s real.”

She added: “The last thing I want is people taking my clothes off using AI or adding themselves next to me half naked when I’m on holiday.”

Keir Starmer has condemned the AI images users have produced via Grok as “disgusting”. Credit: PA

The Grok row has shone a light on questions about the need for more regulation across the world of AI.

Prime Minister Keir Starmer has condemned the images created by users via Grok as “disgusting” and “completely unacceptable”.

And he backed Ofcom to act and warned that “all options remain on the table.”

The Internet Watch Foundation claims that criminals have already used Grok to generate child sexual abuse material depicting children as young as 11.

Musk has said he is “not aware” of any “naked underage images” created by Grok.

But earlier this week, Elon took action – and vowed that Grok will no longer be able to edit photos of real people in jurisdictions where it is illegal.

The UK government is set to bring into force a law which would make it a criminal offence to create or request deepfakes of adults.

It is currently illegal to share images of this type in the UK.

Starmer’s government is also planning to make it illegal to supply online tools used to create such images.

Sarah Smith, Innovation Lead at the Lucy Faithfull Foundation, told The Sun: “It’s deeply worrying that Grok AI could create sexualised images of children.

“X must act now by disabling image‑editing features until robust safeguards are in place to stop this from happening again, and by reporting illegal content transparently to regulators.

“Ultimately, all online platforms have a responsibility to embed child protection by design – guided not only by legal obligations under acts like the Online Safety Act, but also by an ethical duty.”

In a post from its @Safety account, X said: “We take action against illegal content on X, including Child Sexual Abuse Material (CSAM), by removing it, permanently suspending accounts, and working with local governments and law enforcement as necessary.

“Anyone using or prompting Grok to make illegal content will suffer the same consequences as if they upload illegal content.”

Sophie Corcoran said that image creation is a problem with all AI services – not just Grok. Credit: SUPPLIED

Reem Ibrahim said that banning Grok’s image-editing function makes as much sense as banning pen and paper. Credit: SUPPLIED

Online commentator Sophie Corcoran told The Sun that the issue is wider than Grok alone.

She said: “I very much agree that image creation is a problem, but it’s a problem with all AI services, not just Grok.

“The government’s focus on Grok and potentially banning X reeks of political desperation and a clear effort to stifle free speech – Musk has been one of Starmer’s biggest critics and thorn in his side.

“It comes as no surprise to see him grasp at any straw he can to shut him up.”

Reem Ibrahim, head of media at the Institute of Economic Affairs, said the problem is the “user, not the tool”.

She told The Sun: “Banning image‑editing on AI makes about as much sense as banning pen and paper.

“Just because someone might draw something offensive and distribute it widely, doesn’t mean the tool itself should be punished.

“Putting people in CGI-generated bikinis, for example, is not a new phenomenon. If someone does it without your consent, the problem is the user, not the tool.

“One must question whether the attack on Grok and X is political in nature, especially seeing as image‑editing abilities exist across the internet and other AI chatbots.

“Knee-jerk bans do not protect anyone, but they do empower a growing class of anti‑free‑speech sensitive busybodies who constantly demand more government control.”

The Sun approached X for comment.

DEFENCE AGAINST THE DEEPFAKES

Here’s what Sean Keach, Head of Technology and Science at The Sun, has to say…

The rise of deepfakes is one of the most worrying trends in online security.

Deepfake technology can create videos of you even from a single photo – so almost no one is safe.

But although it seems a bit hopeless, the rapid rise of deepfakes has some upsides.

For a start, there’s much greater awareness about deepfakes now.

So people will be looking for the signs that a video might be faked.

Similarly, tech companies are investing time and money in software that can detect faked AI content.

This means social media will be able to flag faked content to you with increased confidence – and more often.

As the quality of deepfakes grows, you’ll likely struggle to spot visual mistakes – especially in a few years.

So your best defence is your own common sense: apply scrutiny to everything you watch online.

Ask whether the video is something it would make sense for someone to have faked – and who benefits from you seeing it.

If you’re being told something alarming, a person is saying something that seems out of character, or you’re being rushed into an action, there’s a chance you’re watching a fraudulent clip.